The requirement for recurring job execution is quite common in Microsoft Dynamics implementations. Here are some of the business requirements I have encountered:

- Send monthly newsletter to target customers

- Synchronize MSCRM Users details with Active Directory once a day

- Once a month, disqualify all leads that have no open activities

- Once every hour, export Appointments from mail server and import into Dynamics 365

Microsoft Dynamics 365 has no reliable built-in scheduling mechanism that can be leveraged for custom solutions. The Asynchronous Batch Process Pattern I have written about in the past works for daily recurring jobs, but at a resolution of hours or less it becomes unreliable.

Azure Scheduler is a reliable service that can run jobs in or out of Azure on a predefined schedule, multiple times or just once. So why not harness this mechanism to schedule Microsoft Dynamics 365 recurring jobs?

In this post, I’ll demonstrate how to use Azure Scheduler to execute a recurring job in Microsoft Dynamics 365.

Sample business requirement

Each day, automatically email a birthday greeting to contacts whose birthday occurs on that same day.

Implementation Details

Here are the solution's main components:

- Custom Action dyn_SendBirthdayGreetings: activates a Custom Workflow Activity, SendBirthdayGreeting, which retrieves all relevant Contact records by birthdate, then creates and sends an email for each Contact record.

- Azure Function BirthdayGreetingsFunction: invokes the dyn_SendBirthdayGreetings Custom Action via Microsoft Dynamics 365 API.

- Azure Scheduler BirthdayGreetingsSchduler: set to run once a day at 9:00 with no end date; it invokes the BirthdayGreetingsFunction Azure Function.
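The date-matching rule behind "relevant Contact records by birthdate" can be sketched as a small helper. This is only an illustration, not the code of the SendBirthdayGreeting activity: the class and method names are hypothetical, and the handling of February 29 birthdays is an assumption the original requirement does not specify.

```csharp
using System;

public static class BirthdayMatcher
{
    // Returns true when birthDate falls on the same day and month as today.
    // Assumption (not from the original post): contacts born on Feb 29
    // are greeted on Feb 28 in non-leap years.
    public static bool IsBirthdayToday(DateTime birthDate, DateTime today)
    {
        bool bornOnLeapDay = birthDate.Month == 2 && birthDate.Day == 29;
        if (bornOnLeapDay && !DateTime.IsLeapYear(today.Year))
        {
            return today.Month == 2 && today.Day == 28;
        }

        return birthDate.Month == today.Month && birthDate.Day == today.Day;
    }

    public static void Main()
    {
        DateTime today = new DateTime(2018, 3, 14);

        Console.WriteLine(IsBirthdayToday(new DateTime(1985, 3, 14), today)); // True
        Console.WriteLine(IsBirthdayToday(new DateTime(1985, 3, 15), today)); // False
    }
}
```

Only the day and month are compared, so the year of birth never affects the result.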

Architectural Notes

Why use a Custom Action? Although it is possible to manage the required business logic in the Azure Function, Dynamics-related business logic should reside within Dynamics, managed and packaged in a Solution. This way, the scheduling and executing components are kept as agnostic and isolated as possible, and are therefore easy to maintain.

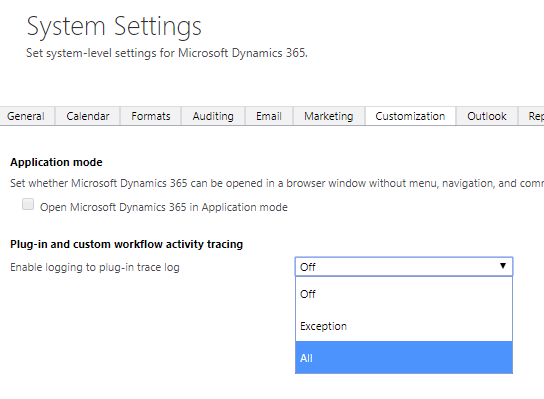

Having said that, you should be aware of the Sandbox Execution Timeout Limitation and consider using a Custom Workflow Activity only after assessing the business logic at hand.

Implementation Steps:

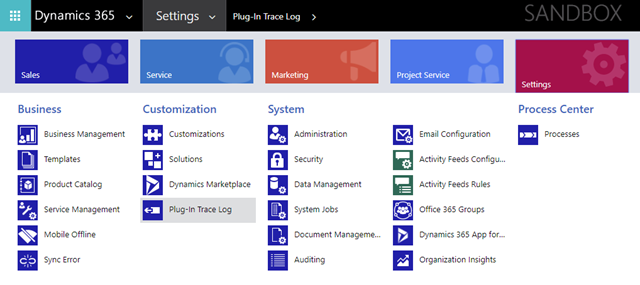

- Define dyn_SendBirthdayGreetings Custom Action

Download, import and publish the unmanaged BirthdayGreetingAutomationSample_1_0_0_0 solution.

It contains a Custom Action called dyn_SendBirthdayGreetings, which will later be called from the BirthdayGreetingsFunction Azure Function.

By default, the Custom Action creates a birthday greeting email but does not send it. To change this, deactivate the Custom Action, edit its only step, set the Send greeting email after creation? property value to true, and activate the Custom Action again. Note that this may actually send email to your contacts.

The SendBirthdayGreeting Custom Workflow Activity code can be found here.

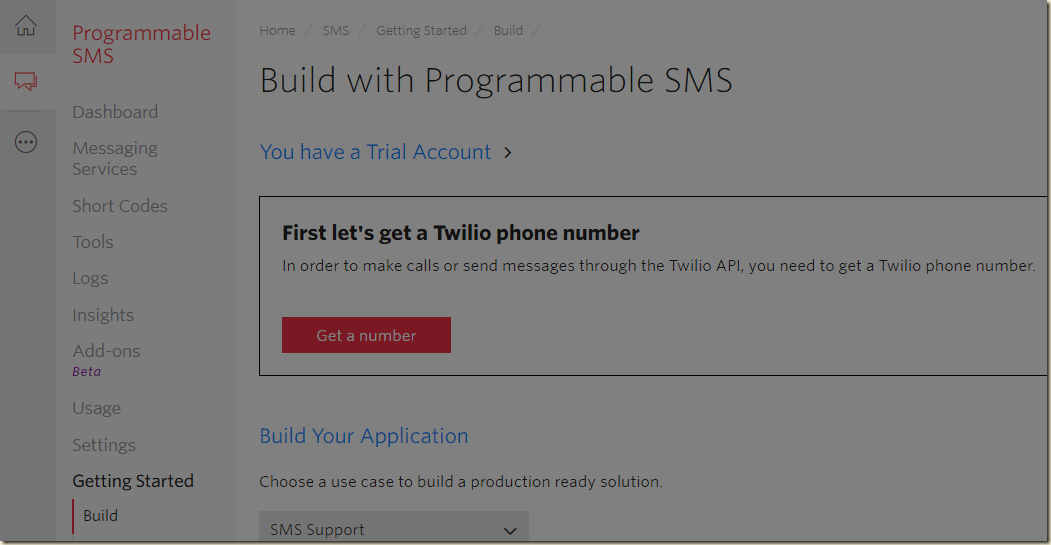

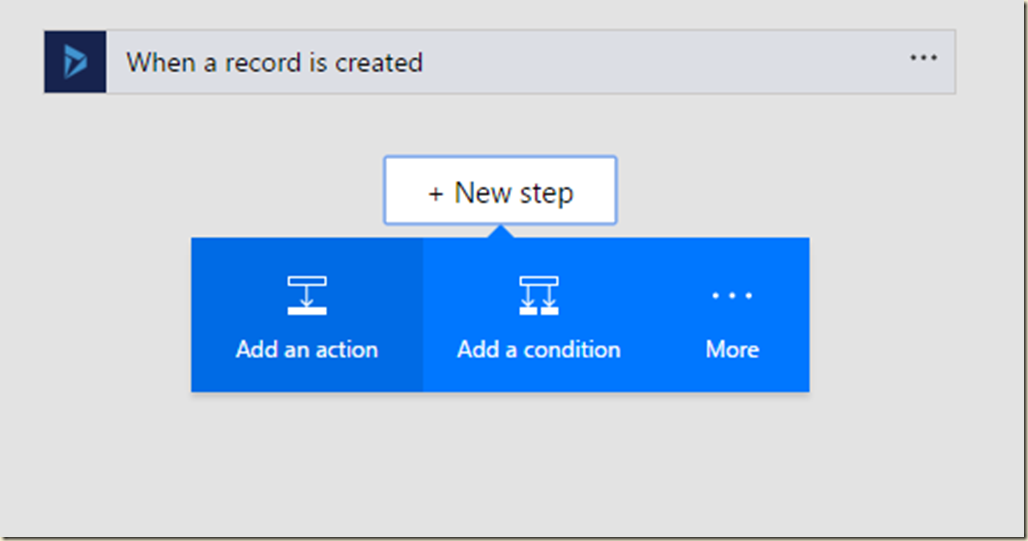

- Define BirthdayGreetingsFunction Azure Function

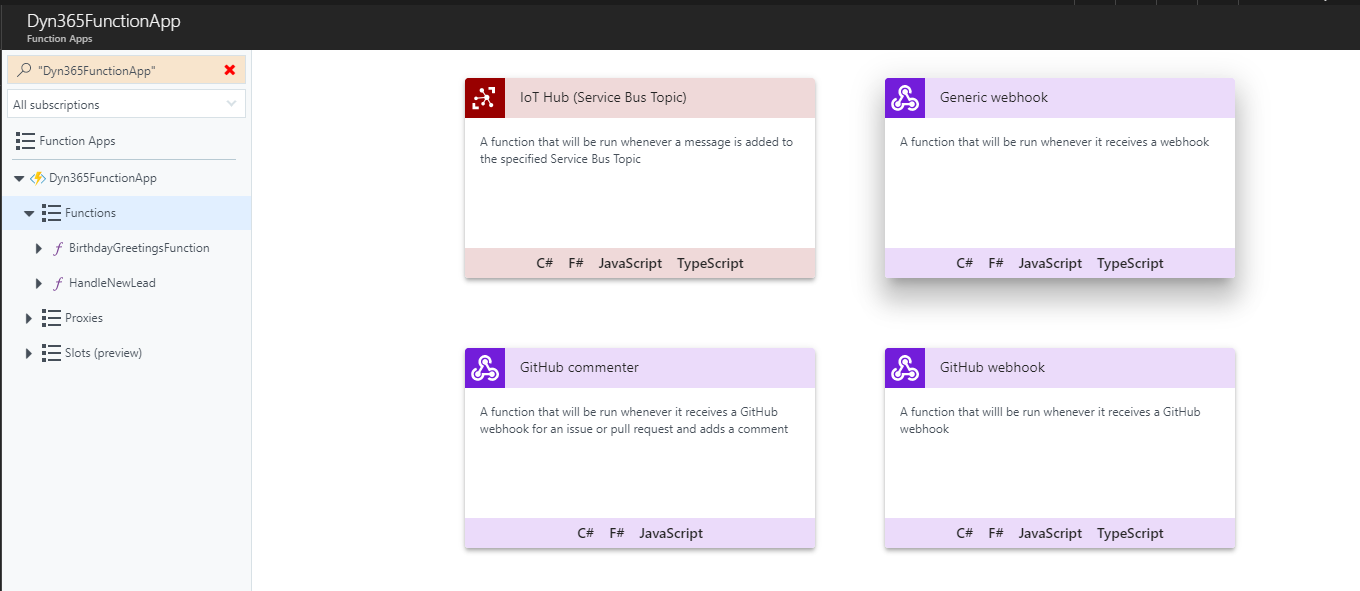

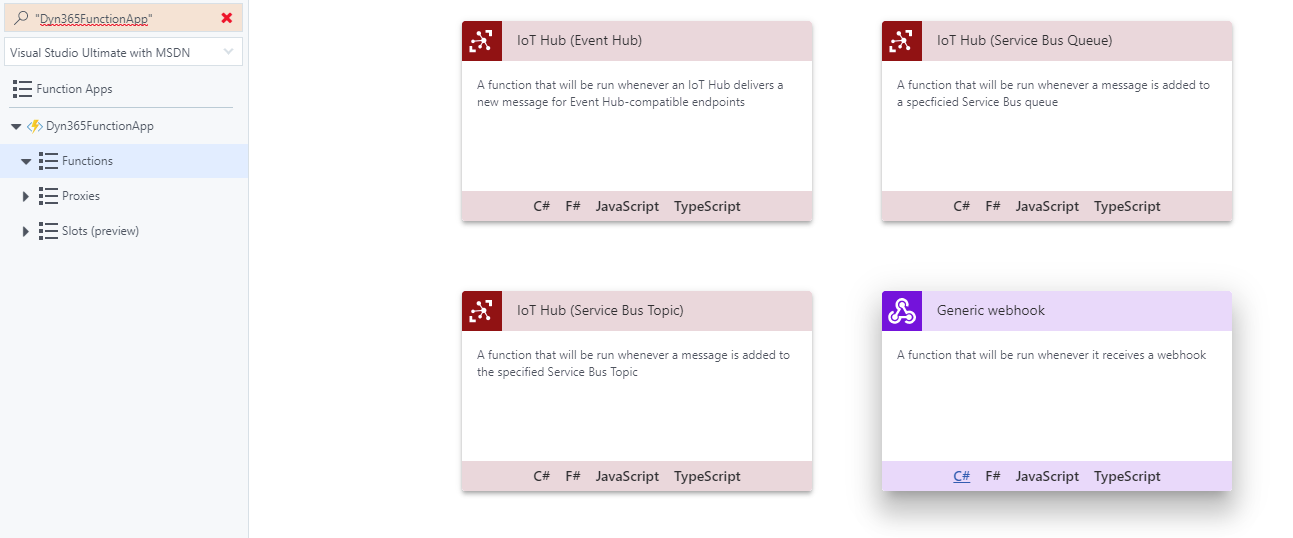

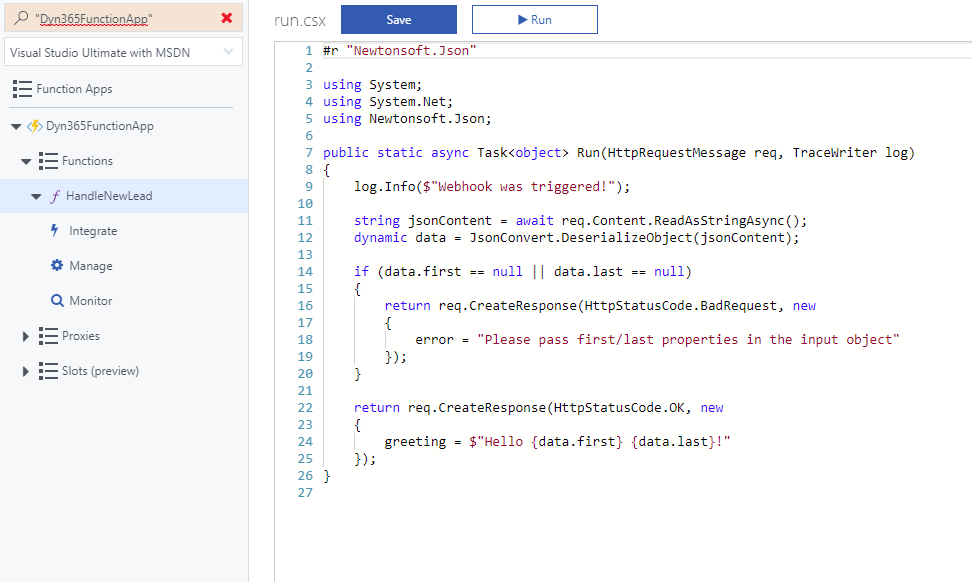

After creating a Function App (follow the first 3 steps here), create a new C# Function of type Generic webhook under your Function App functions collection and name it SendBirthdayGreetingFunction.

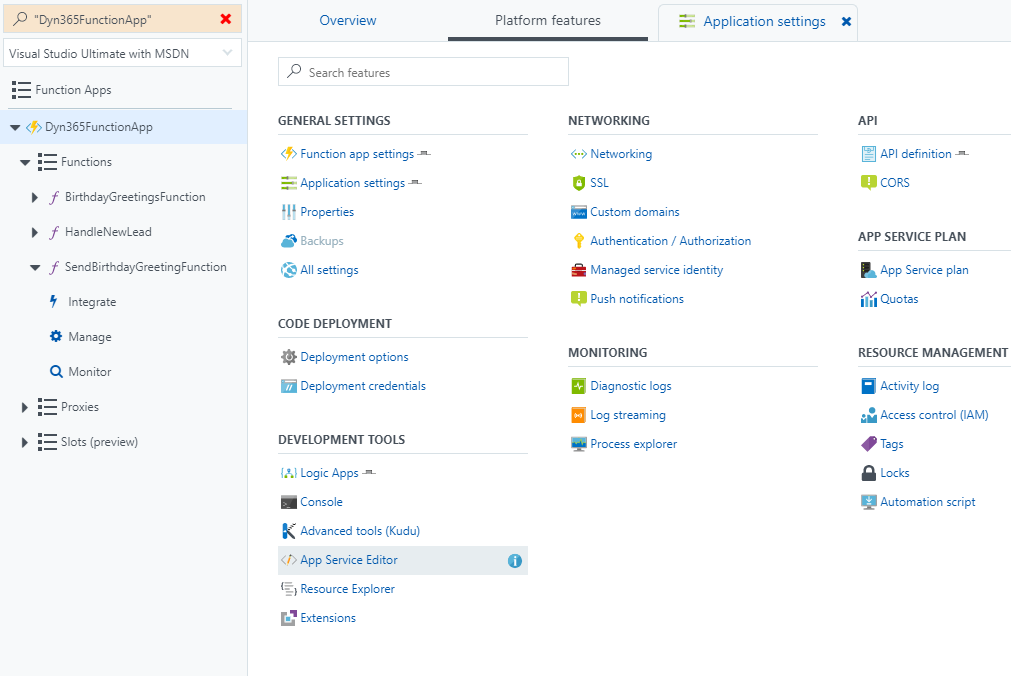

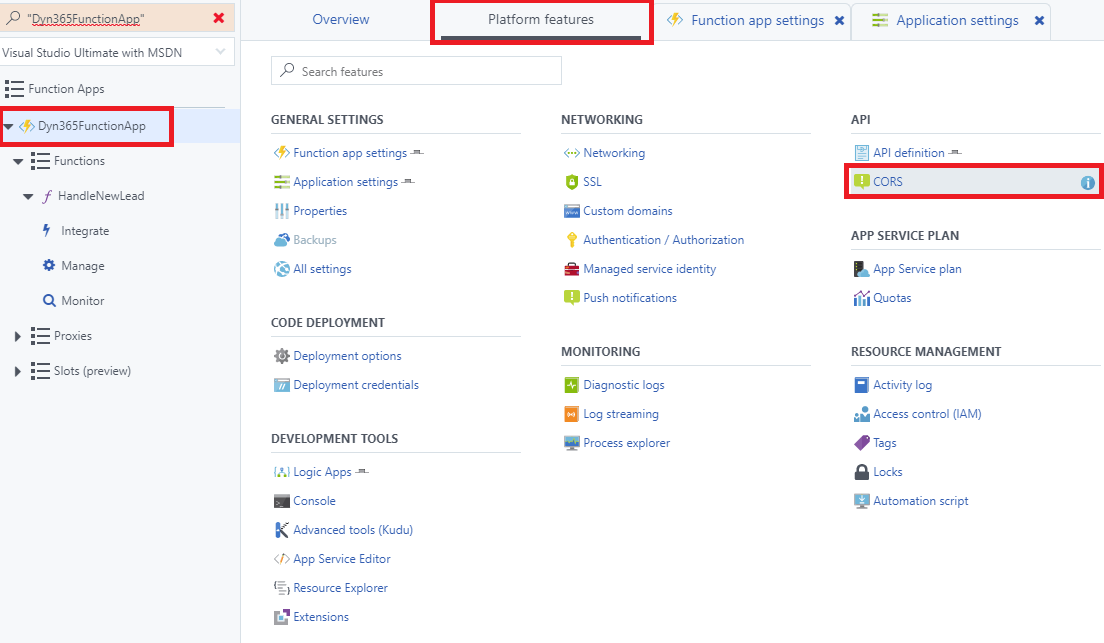

Click the App Service Editor option in the Application Settings tab. Add a new file under your Function root and name it project.json. Copy the following JSON snippet into the text editor:

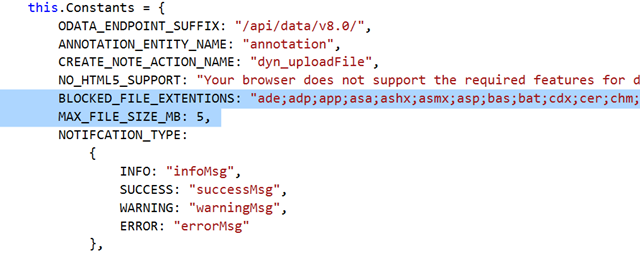

    {
      "frameworks": {
        "net46": {
          "dependencies": {
            "Microsoft.CrmSdk.CoreAssemblies": "9.0.0.7",
            "Microsoft.CrmSdk.XrmTooling.CoreAssembly": "9.0.0.7"
          }
        }
      }
    }

Close the App Service Editor to get back to your function. Select your Function App and click the Application settings tab.

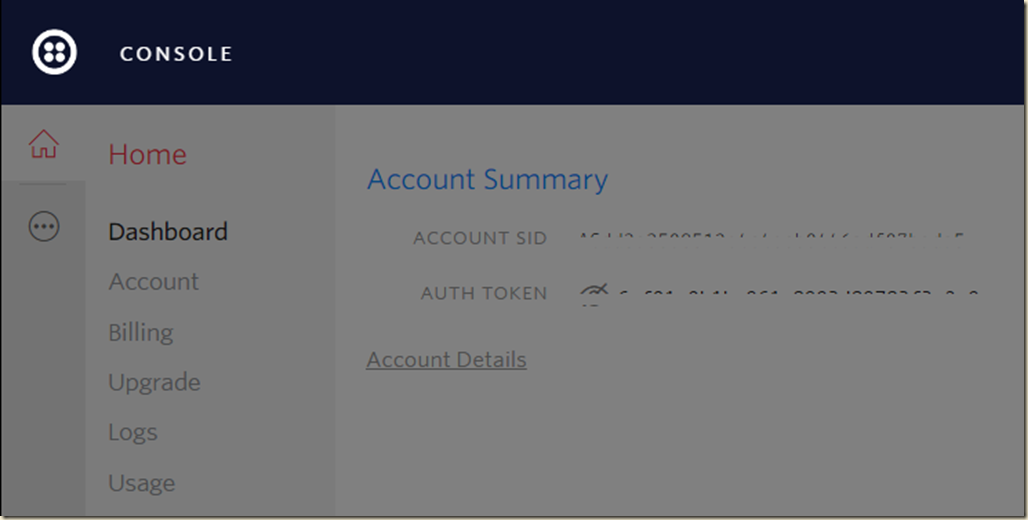

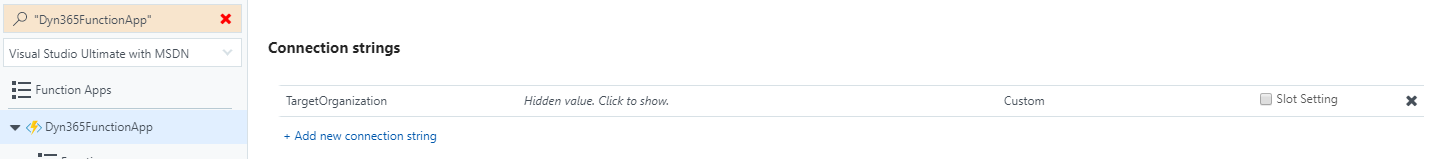

Scroll down to the Connection strings area and add a new Connection string named TargetOrganization. This will be used to connect and authenticate to your Microsoft Dynamics 365 Organization.

For the connection string value, set your organization details in the following format:

    AuthType=Office365;Username=XXX@ORGNAME.onmicrosoft.com;Password=PASSWORD;Url=https://ORGNAME.crm.dynamics.com

Note the data center in the URL: crm.dynamics.com targets an organization in the North America data center. Organizations hosted in other regions use a different host name.

Click Save to save the newly created Connection String.
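To make the parts of the connection string format easier to see, here is a minimal sketch that assembles the same string from its pieces. The helper name and its parameters are hypothetical, and the sample values (contoso, admin, crm4.dynamics.com for an EMEA-hosted organization) are placeholders; in the actual solution the value is simply typed into the Connection strings area.

```csharp
using System;

public static class ConnectionStringSketch
{
    // Builds a connection string in the Office365 format used by this post.
    // regionHost is the data center host, e.g. "crm.dynamics.com" (North America)
    // or "crm4.dynamics.com" (EMEA).
    public static string Build(string orgName, string userName, string password, string regionHost)
    {
        return string.Format(
            "AuthType=Office365;Username={0}@{1}.onmicrosoft.com;Password={2};Url=https://{1}.{3}",
            userName, orgName, password, regionHost);
    }

    public static void Main()
    {
        // Placeholder values only; never hard-code real credentials.
        Console.WriteLine(Build("contoso", "admin", "PASSWORD", "crm4.dynamics.com"));
    }
}
```

Keeping the value in the Function App settings rather than in code, as described above, means the function can be pointed at a different organization without redeploying it.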

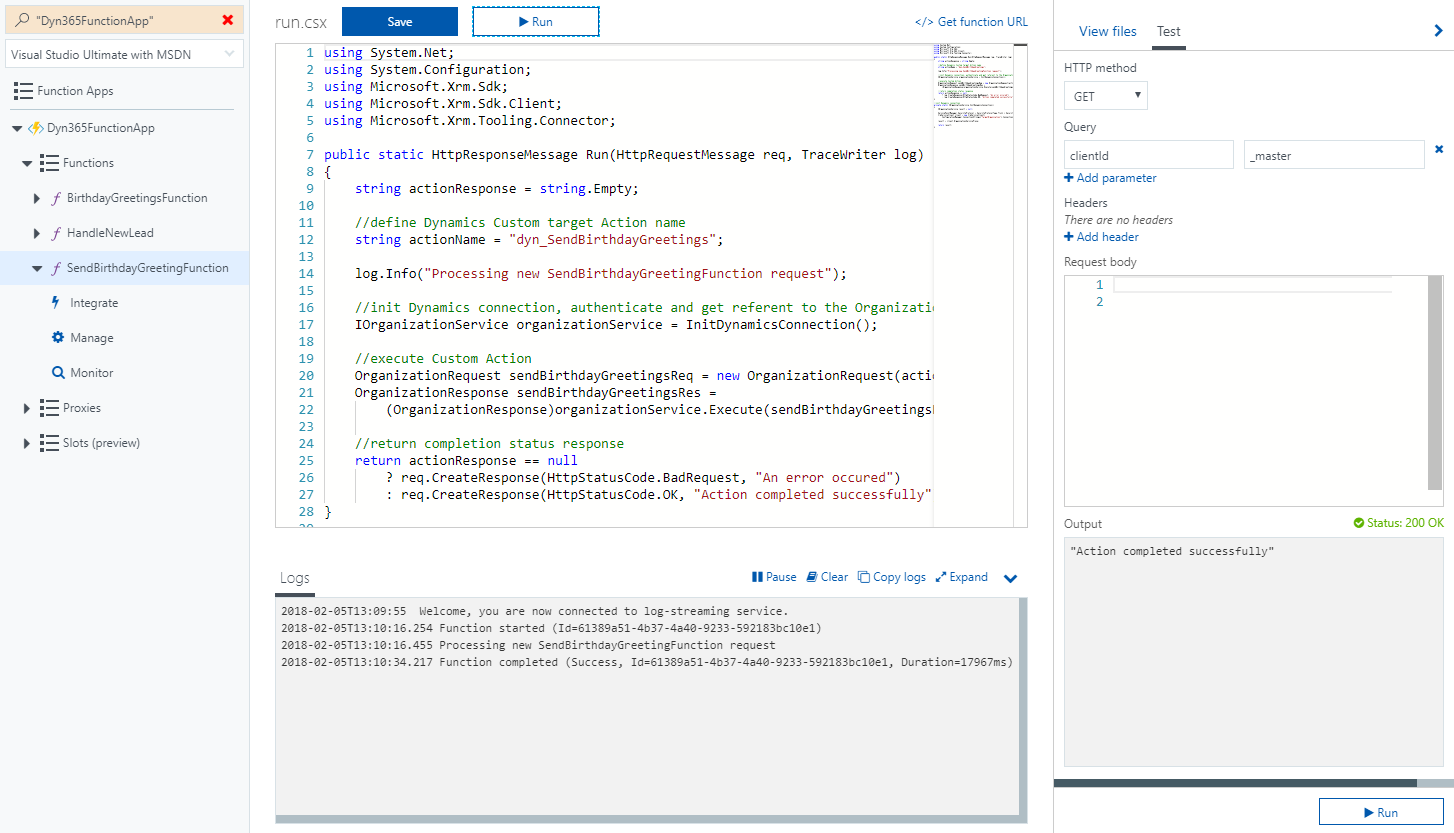

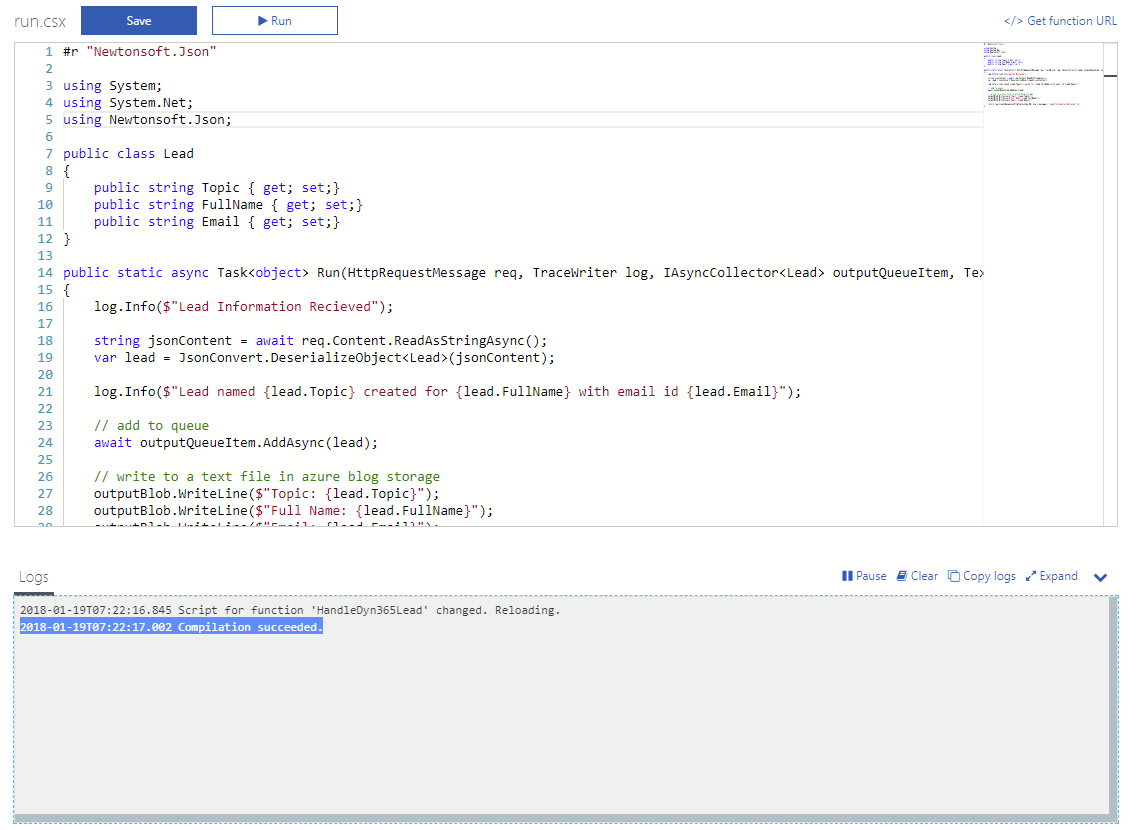

Navigate back to your SendBirthdayGreetingFunction function and replace the default Function code with the following code snippet.

Note that the code executes a Custom Action named dyn_SendBirthdayGreetings. It also uses the TargetOrganization connection string when accessing the Microsoft Dynamics 365 API.

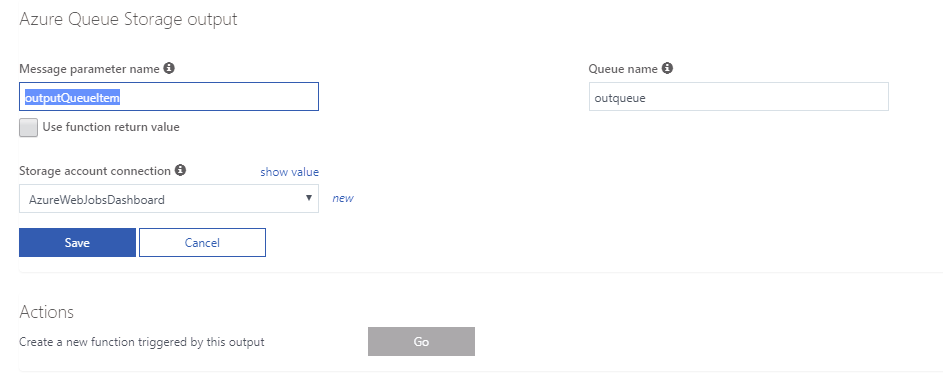

    using System;
    using System.Net;
    using System.Configuration;
    using Microsoft.Xrm.Sdk;
    using Microsoft.Xrm.Sdk.Client;
    using Microsoft.Xrm.Tooling.Connector;

    public static HttpResponseMessage Run(HttpRequestMessage req, TraceWriter log)
    {
        // define the target Dynamics Custom Action name
        string actionName = "dyn_SendBirthdayGreetings";

        log.Info("Processing new SendBirthdayGreetingFunction request");

        try
        {
            // init Dynamics connection, authenticate and get a reference to the Organization Service
            IOrganizationService organizationService = InitDynamicsConnection();

            // execute the Custom Action; Execute throws if the action fails
            OrganizationRequest sendBirthdayGreetingsReq = new OrganizationRequest(actionName);
            organizationService.Execute(sendBirthdayGreetingsReq);

            // return completion status response
            return req.CreateResponse(HttpStatusCode.OK, "Action completed successfully");
        }
        catch (Exception ex)
        {
            // report the failure instead of always returning a success status
            log.Error("SendBirthdayGreetingFunction failed", ex);
            return req.CreateResponse(HttpStatusCode.BadRequest, "An error occurred");
        }
    }

    // init Dynamics connection
    private static IOrganizationService InitDynamicsConnection()
    {
        ServicePointManager.SecurityProtocol = SecurityProtocolType.Tls11 | SecurityProtocolType.Tls12;

        CrmServiceClient client = new CrmServiceClient(
            ConfigurationManager.ConnectionStrings["TargetOrganization"].ConnectionString);

        // fail early with the connection error if authentication did not succeed
        if (!client.IsReady)
        {
            throw new InvalidOperationException(client.LastCrmError);
        }

        return client.OrganizationServiceProxy;
    }

Navigate to the Function Integrate area and set the Mode to Standard. This enables the function to be consumed using the HTTP GET method.

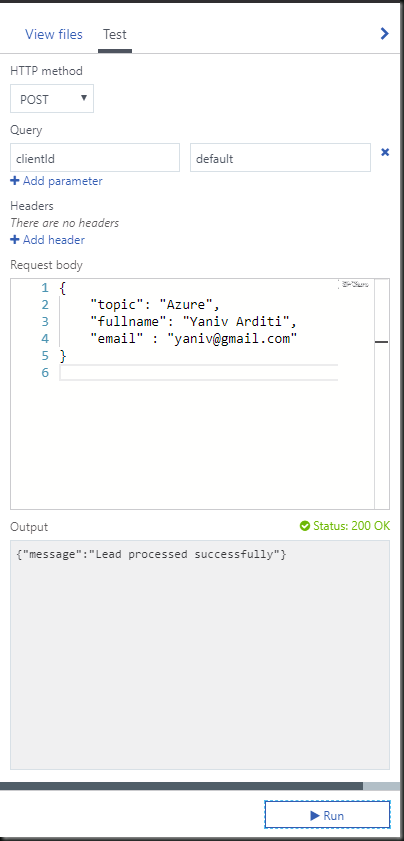

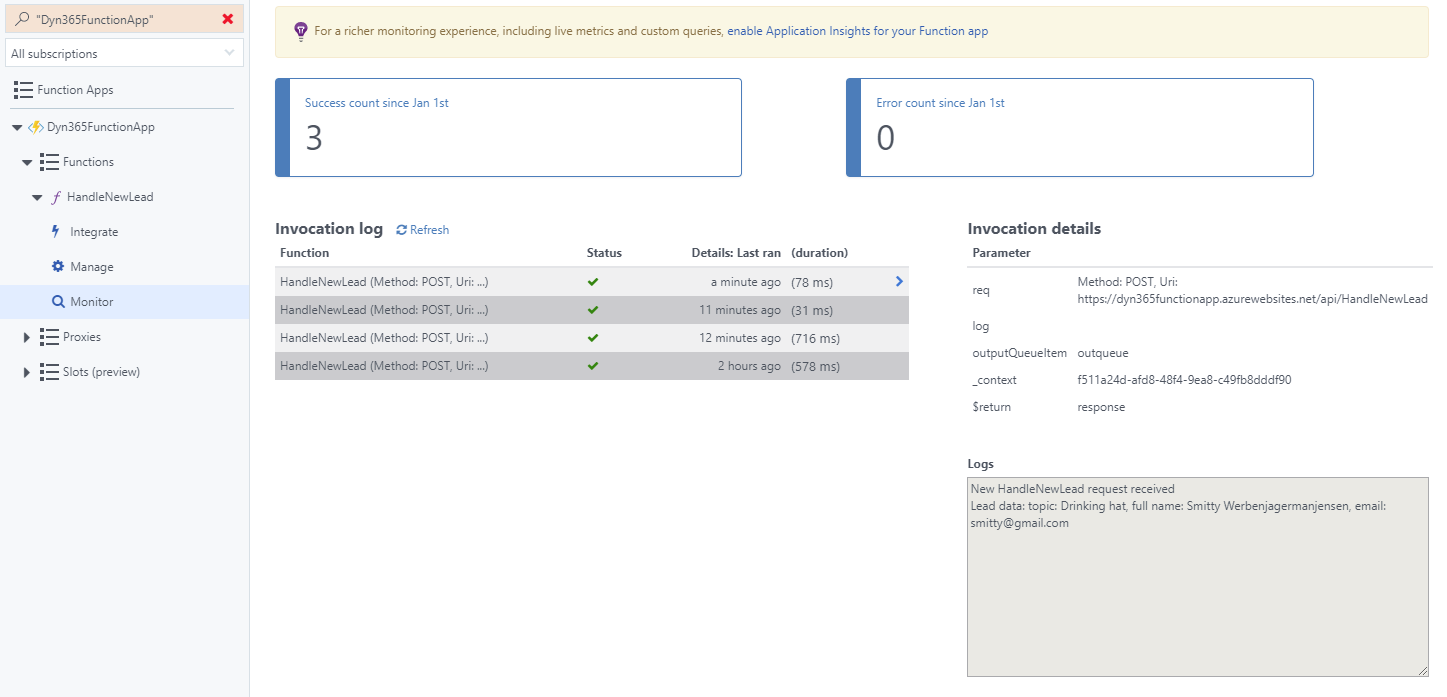

Back at your Function code editor, expand the collapsed Test pane and set the HTTP method to GET. Click Run to test your function. If all went well, a success message will be returned.

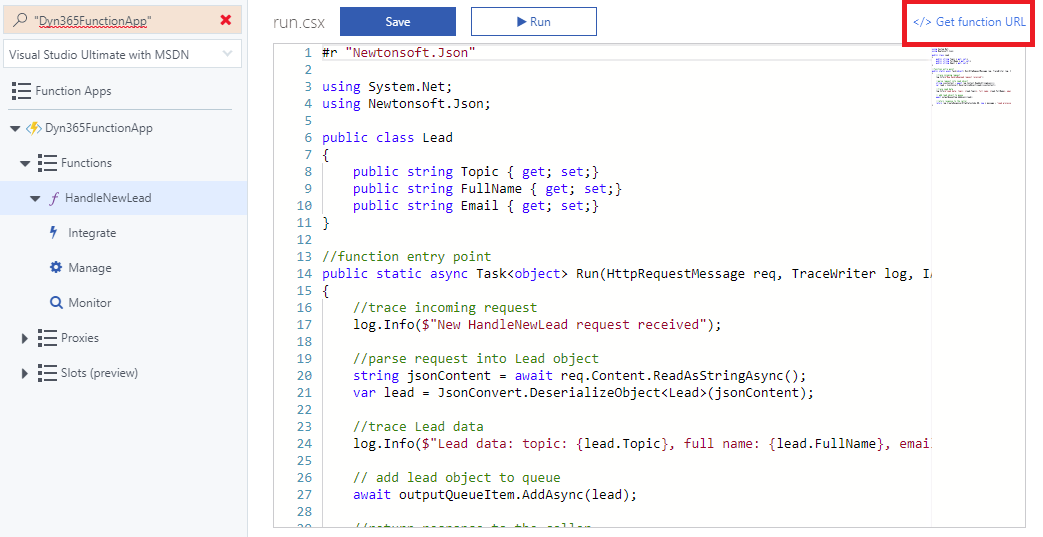

Finally, click the </> Get function URL button on top and copy your function endpoint address.

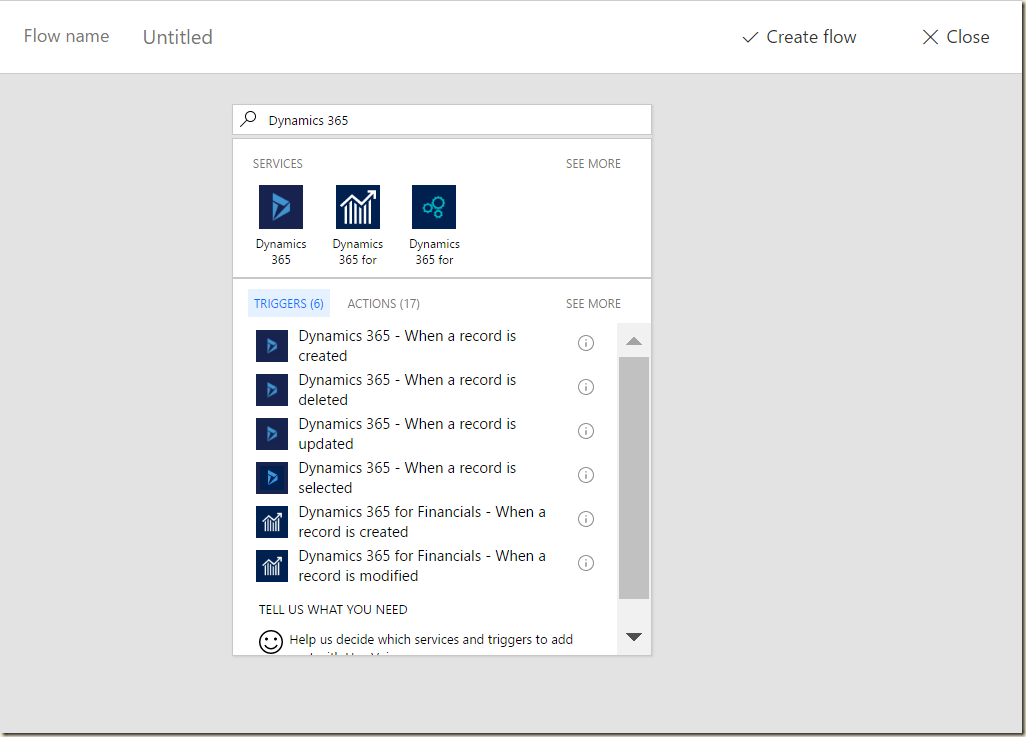

- Define BirthdayGreetingSchduler Azure Scheduler

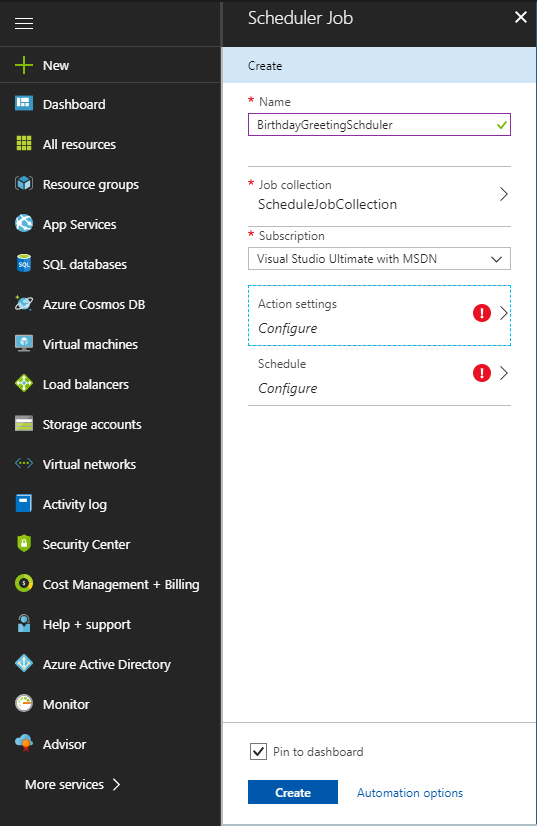

Click the + New button on the left menu, type in Scheduler and select the Scheduler element returned. Click Create.

Set the scheduler name to BirthdayGreetingSchduler.

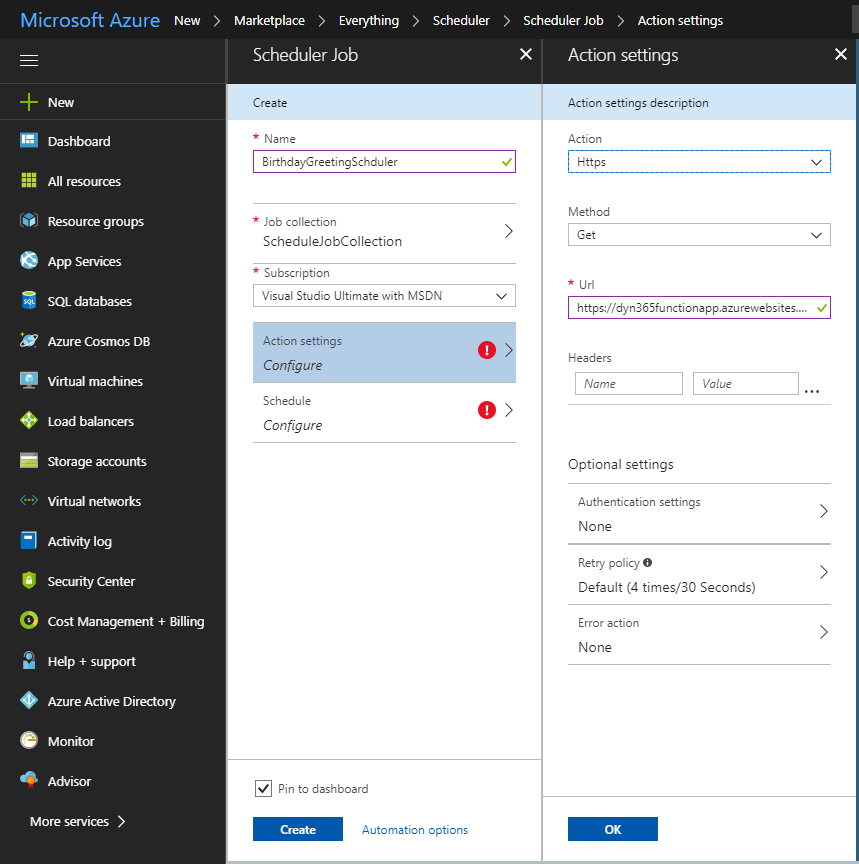

Click the Action settings tile. Set the Action to Https, the method to Get, and paste in the Function URL you copied at the end of step 2 above. Click OK.

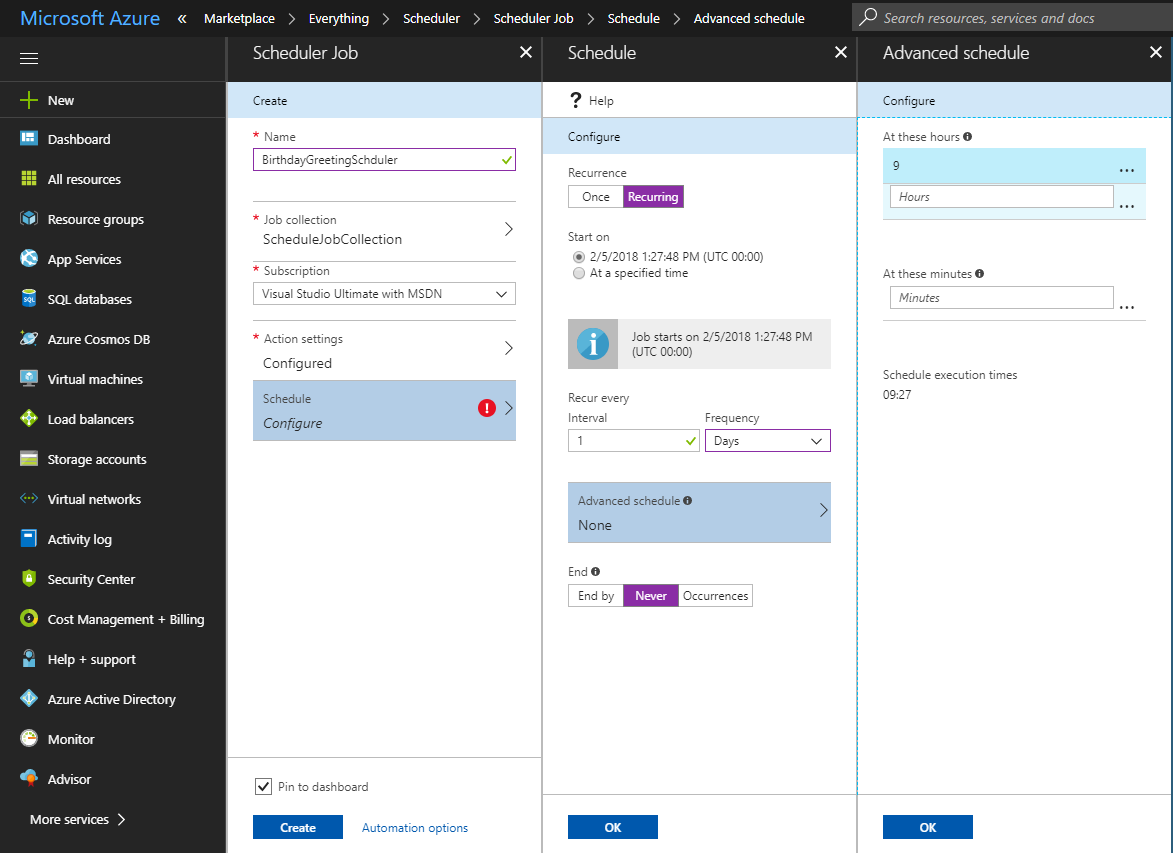

Click the Schedule tile and set the schedule to Recurring, the frequency to Days and End to Never. Click the Advanced schedule pane and enter 9 in the Hours text box. Click OK.

This will trigger your function every day at 9:00, with no limit on the number of occurrences.
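To illustrate what "every day at 9:00" means for the first run, the following sketch computes the next occurrence of a fixed hour after a given moment. It is not how Azure Scheduler is implemented; the names are hypothetical, and time zones are deliberately ignored, so the hour is interpreted in whatever zone the input time uses.

```csharp
using System;
using System.Globalization;

public static class DailyScheduleSketch
{
    // Returns the next occurrence of hour:00 strictly after 'now'.
    public static DateTime NextRun(DateTime now, int hour)
    {
        DateTime candidate = now.Date.AddHours(hour);
        return candidate > now ? candidate : candidate.AddDays(1);
    }

    public static void Main()
    {
        string format = "yyyy-MM-dd HH:mm";

        // Before 9:00 the job runs later the same day.
        Console.WriteLine(NextRun(new DateTime(2018, 5, 1, 8, 30, 0), 9)
            .ToString(format, CultureInfo.InvariantCulture)); // 2018-05-01 09:00

        // At or after 9:00 the job waits until the next day.
        Console.WriteLine(NextRun(new DateTime(2018, 5, 1, 9, 0, 0), 9)
            .ToString(format, CultureInfo.InvariantCulture)); // 2018-05-02 09:00
    }
}
```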

Check Pin to dashboard and click Create. After a few seconds, you will be navigated to the Scheduler management area.

- Test

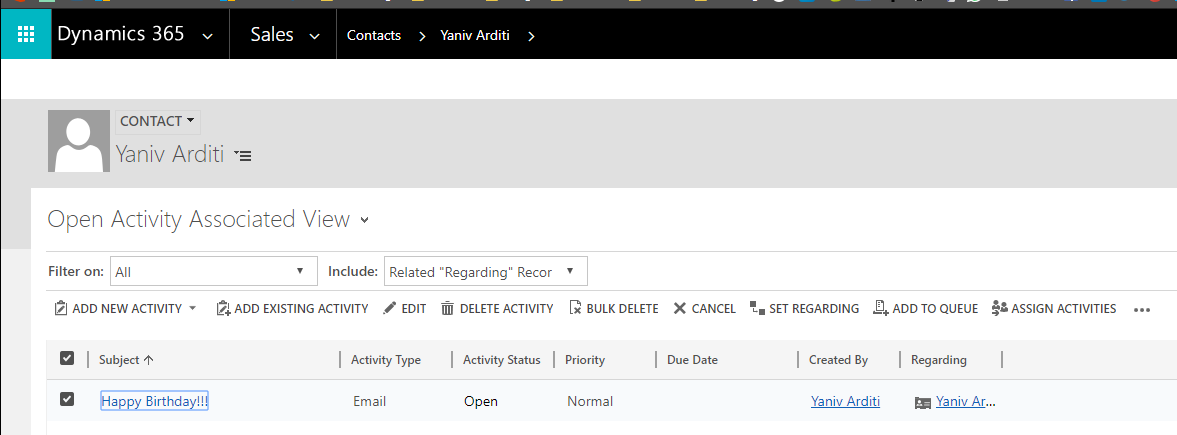

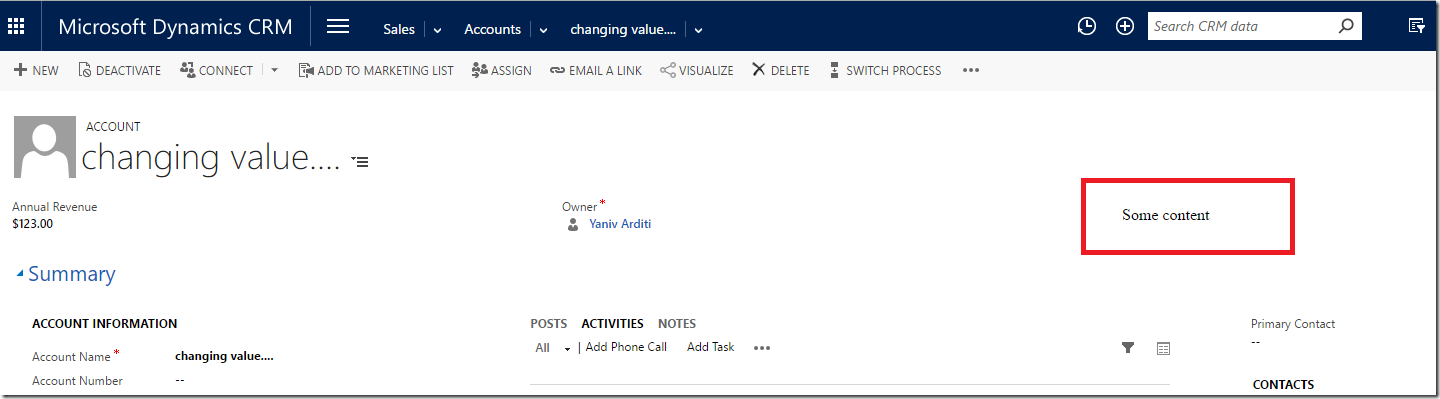

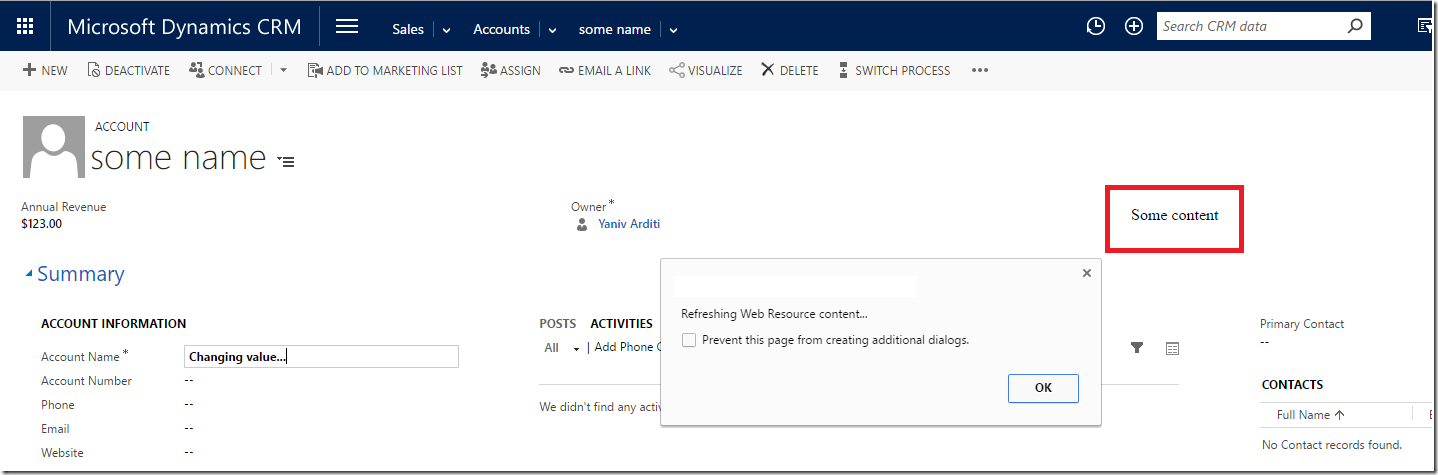

To test the complete solution, update a Microsoft Dynamics 365 test Contact record birthdate to match the day and month of the current date.

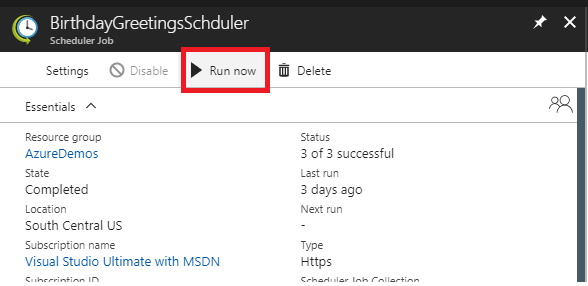

Go back to your Scheduler Job and click Run now.

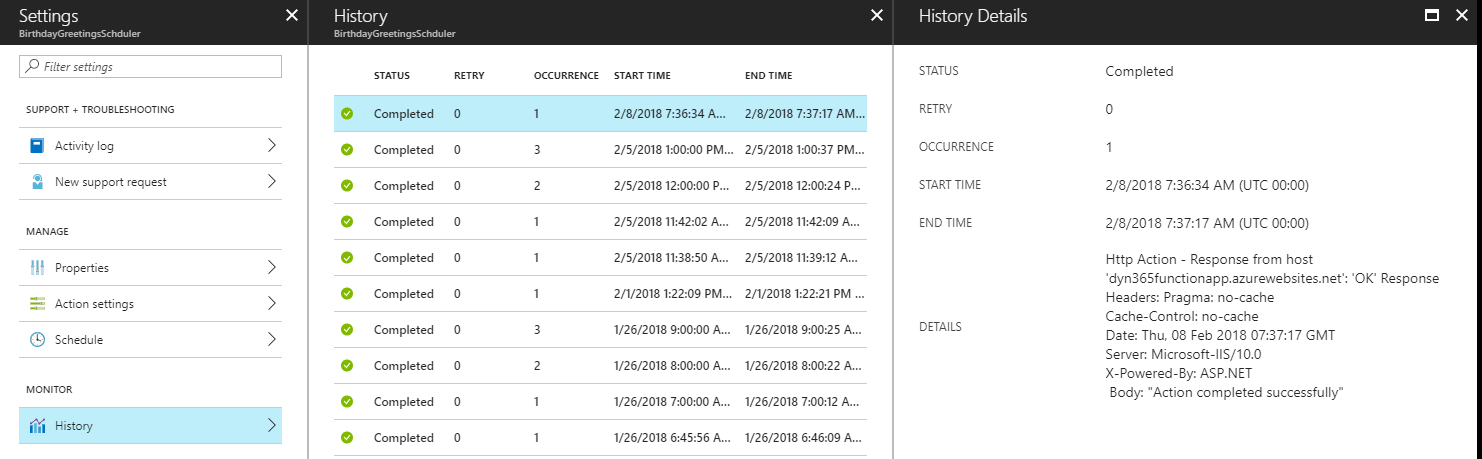

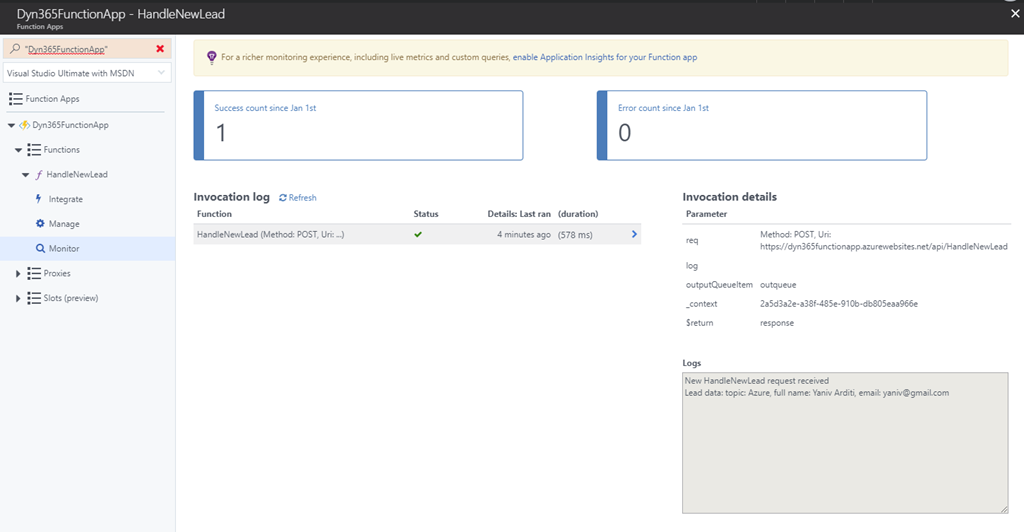

In the History tab, you can monitor the completion status of the Scheduler job.

After refreshing the test Contact's activities list, you should see the newly created birthday greeting email.